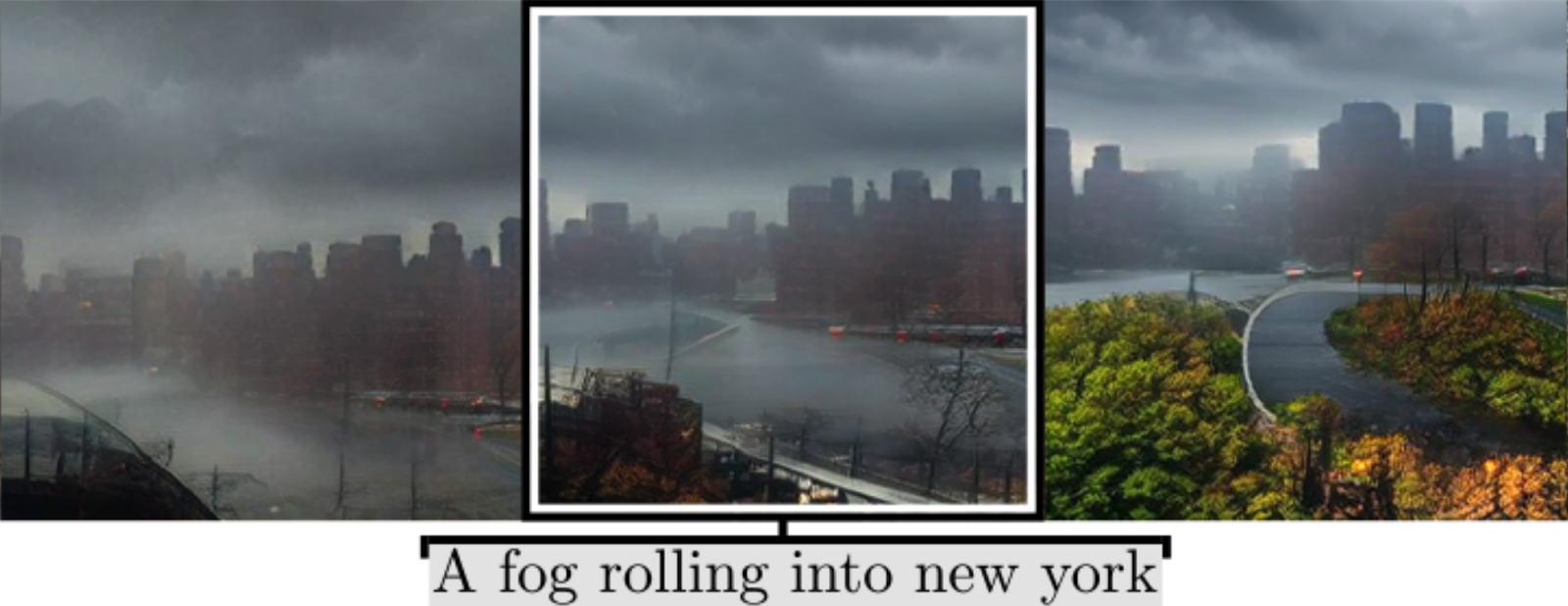

An example of text-to-image generation by StyleGAN-T. (Source: https://arxiv.org/pdf/2301.09515.pdf)

What is StyleGAN-T?

StyleGAN-T is a text-to-image generation model based on the Generative Adversarial Network (GAN) architecture. GANs had largely fallen out of favour for image generation after the arrival of diffusion models, until StyleGAN-T was released in January 2023.

Typical text-to-image models need many iterative evaluation steps to produce a single image. A GAN, by contrast, requires just one forward pass and is therefore far more efficient. Older GANs were efficient, but they did not match the output quality of more recent text-to-image models.

StyleGAN-T combines that efficiency with high output quality, making it competitive with recent diffusion-based models.

History of StyleGAN

Several versions of StyleGAN have been released during the last five years. The architecture has improved incrementally with each iteration. The timeline below outlines the evolution of StyleGAN over the years.

StyleGAN (Dec 2018)

StyleGAN was a pioneering GAN model introduced by a team of NVIDIA researchers in December 2018. It was designed to generate realistic images while allowing specific image characteristics, or styles, to be adjusted. Examples of these styles include colour, texture, and pose.

StyleGAN begins by taking a random vector as input. This vector is converted into a style vector, which represents various aspects of the image's style and appearance. The generator network then uses the style vector to produce an image. The discriminator network judges whether an image is real or generated.

During training, the generator and discriminator compete against each other. The generator tries to produce images that fool the discriminator, while the discriminator tries to distinguish accurately between real and generated images.

Generating lifelike human faces was one of the most striking and popular uses of StyleGAN. The image below shows a variety of human faces that were generated by StyleGAN.
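The competition described above can be sketched with a pair of loss functions. This is a minimal illustration in plain Python, assuming the non-saturating logistic loss commonly used to train GANs; the logit values are made up, and no real generator or discriminator network is involved.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def discriminator_loss(real_logits, fake_logits):
    """Penalise low scores on real images and high scores on generated ones."""
    real_term = sum(softplus(-r) for r in real_logits) / len(real_logits)
    fake_term = sum(softplus(f) for f in fake_logits) / len(fake_logits)
    return real_term + fake_term


def generator_loss(fake_logits):
    """The generator's loss falls when the discriminator scores its images as real."""
    return sum(softplus(-f) for f in fake_logits) / len(fake_logits)


# Hypothetical discriminator outputs (higher means "looks real").
real_logits = [2.0, 1.5, 3.0]
fooled = [2.5, 1.0]    # generated images the discriminator believes
caught = [-2.5, -1.0]  # generated images the discriminator rejects

# The generator prefers the "fooled" case; the discriminator prefers "caught".
print(generator_loss(fooled) < generator_loss(caught))
print(discriminator_loss(real_logits, caught) < discriminator_loss(real_logits, fooled))
```

Both comparisons print `True`: the same set of discriminator scores that lowers one network's loss raises the other's, which is what drives the two networks to improve together.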

None of the people in the above image are real. The images were generated by StyleGAN (Source: https://arxiv.org/pdf/1812.04948.pdf)

StyleGAN 2 (Feb 2020)

StyleGAN was widely adopted. As usage grew, so did awareness of its limitations, such as the tendency for blob-shaped artifacts to appear in generated images. This prompted researchers to work on improving image quality, which resulted in StyleGAN 2.

StyleGAN 2 revised the generator design, including the use of skip connections. Skip connections improve information flow and gradient propagation during training. Together with the other architectural changes, this led to higher-quality images. StyleGAN 2 was also designed to be more stable during training.

StyleGAN 3 (June 2021)

StyleGAN 3 set out to overcome the problem of "texture sticking" by employing an alias-free GAN architecture. Texture sticking occurs when fine details, such as hair, appear fixed to pixel coordinates instead of moving with the object they belong to. In the animation below, the hair on the left appears to stick to the screen as the face morphs.

Hair generated by StyleGAN 2 with texture sticking present (left) and hair generated by StyleGAN 3 (right). (Source: https://medium.com/@steinsfu/stylegan3-clearly-explained-793edbcc8048)

An alias-free architecture was used to combat texture sticking. Aliasing is a distortion that arises when an image or signal is sampled at too low a rate to represent its detail. A familiar visual example is the jagged edges you see on diagonal lines in a low-resolution image. To avoid aliasing, many adjustments were made to the StyleGAN model architecture.
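Aliasing is easiest to see in one dimension. The sketch below is a generic signal-processing illustration, not code from StyleGAN 3: it samples a 9 Hz sine wave at only 10 samples per second (an arbitrary rate chosen for the example) and shows that the samples cannot be told apart from those of a 1 Hz sine with flipped sign.

```python
import math

SAMPLE_RATE_HZ = 10  # samples per second; frequencies above 5 Hz cannot be represented


def sample_sine(freq_hz: float, n_samples: int) -> list[float]:
    """Sample sin(2*pi*f*t) at t = 0, 1/rate, 2/rate, ..."""
    return [
        math.sin(2 * math.pi * freq_hz * k / SAMPLE_RATE_HZ)
        for k in range(n_samples)
    ]


fast = sample_sine(9.0, 20)    # 9 Hz is above the 5 Hz limit
alias = sample_sine(-1.0, 20)  # a 1 Hz sine with flipped sign

# Largest difference between the two sets of samples.
max_gap = max(abs(a - b) for a, b in zip(fast, alias))
print(max_gap < 1e-9)
```

The check prints `True`: once sampled, the high-frequency signal masquerades as a low-frequency one. In an image generator, the same effect lets fine detail leak into the wrong frequencies, which is the kind of artifact an alias-free design tries to prevent.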

StyleGAN XL (August 2022)

Previous versions of StyleGAN had shown outstanding results in generating face images. However, these models struggled to generate diverse images from datasets with a wide variety of content. The researchers developed StyleGAN XL in order to train StyleGAN 3 on a large, high-resolution, unstructured dataset such as ImageNet.

StyleGAN XL introduced a new training strategy to handle this, improving StyleGAN 3's ability to generate high-quality images from large and diverse datasets and opening up new possibilities for image synthesis.

How does StyleGAN-T work?

A pre-trained CLIP text encoder encodes the text prompt, capturing its semantic meaning as a high-dimensional vector. Then, as a starting point for image synthesis, a random latent vector (z) is sampled. The latent vector is passed through a mapping network, which turns it into intermediate latent codes that allow further control over the generated images.

The encoded text prompt is combined with the intermediate latent codes to create a fused representation that carries both the latent information and the textual information.
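The data flow described so far can be sketched in a few lines of plain Python. Everything here is a toy stand-in: the dimensions, the two-layer mapping network with random (untrained) weights, and the hard-coded text embedding are hypothetical, and fusing by concatenation is an assumption about how the two vectors are "combined".

```python
import random

random.seed(0)

Z_DIM, W_DIM, TEXT_DIM = 8, 8, 4  # toy sizes; real models use far larger vectors


def random_matrix(rows: int, cols: int) -> list[list[float]]:
    """Random weights scaled so that outputs keep a similar magnitude to inputs."""
    return [[random.gauss(0.0, 1.0 / cols ** 0.5) for _ in range(cols)] for _ in range(rows)]


def linear(weights, vec):
    """Matrix-vector product."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def leaky_relu(vec, slope=0.2):
    return [v if v > 0 else slope * v for v in vec]


# A two-layer stand-in for the mapping network (weights are random, not trained).
mapping_layers = [random_matrix(W_DIM, Z_DIM), random_matrix(W_DIM, W_DIM)]


def mapping_network(z):
    w = z
    for layer in mapping_layers:
        w = leaky_relu(linear(layer, w))
    return w


z = [random.gauss(0.0, 1.0) for _ in range(Z_DIM)]  # random latent vector
text_embedding = [0.5, -0.1, 0.3, 0.8]              # placeholder for a CLIP text embedding
w = mapping_network(z)                              # intermediate latent code
fused = w + text_embedding                          # list concatenation, not element-wise addition

print(len(fused))  # 12
```

The fused vector has `W_DIM + TEXT_DIM` entries, so every later layer that reads it sees both the random latent information and the prompt.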

Overview of StyleGAN-T (Source: https://arxiv.org/pdf/2301.09515.pdf)

Affine transformations (learned linear maps with a bias term) are applied to the fused representation to generate per-layer styles that modulate the generator's synthesis layers. These styles (as seen above) influence the resulting image's visual qualities, such as colour, texture, and structure. The generator uses the modulated synthesis layers to produce an image that corresponds to the provided text prompt.

The generated image is then passed through a CLIP image encoder, which converts it to a high-dimensional vector representation. The squared spherical distance between the encoded image and the normalised text embedding is then computed. This distance measures how well the generated image matches the input text conditioning: the smaller the distance, the closer the match.
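As a rough illustration of the modulation step, the sketch below applies one affine map to a fused vector and uses the result to scale feature channels. The weights, bias, and feature values are hypothetical, and scaling feature channels directly is a simplification; StyleGAN-style generators typically apply the styles to convolution weights instead.

```python
def affine(weights, bias, vec):
    """Compute weights @ vec + bias for one layer."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]


def modulate(feature_channels, style):
    """Scale each feature channel by its style coefficient."""
    return [[s * x for x in channel] for channel, s in zip(feature_channels, style)]


fused = [1.0, -2.0, 0.5]  # hypothetical fused latent/text vector

# One affine map per synthesis layer; here a single layer with two channels.
layer_weights = [[0.5, 0.0, 1.0],
                 [0.0, -0.5, 0.0]]
layer_bias = [1.0, 1.0]

style = affine(layer_weights, layer_bias, fused)
features = [[1.0, 2.0], [3.0, 4.0]]  # two channels, two values each

print(style)                      # [2.0, 2.0]
print(modulate(features, style))  # [[2.0, 4.0], [6.0, 8.0]]
```

Because each synthesis layer has its own affine map, the same fused vector can produce a different style for every layer.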
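For unit-length embeddings, the squared spherical distance is the square of the angle between them, that is, arccos of their dot product, squared. Below is a minimal sketch in plain Python; the two-dimensional vectors are stand-ins for real CLIP embeddings, and the function name is chosen for this example.

```python
import math


def normalize(vec):
    """Rescale a vector to unit length."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]


def squared_spherical_distance(image_embedding, text_embedding):
    """Squared angle (in radians) between two embeddings on the unit sphere."""
    a, b = normalize(image_embedding), normalize(text_embedding)
    cosine = sum(x * y for x, y in zip(a, b))
    cosine = max(-1.0, min(1.0, cosine))  # guard against rounding just outside [-1, 1]
    return math.acos(cosine) ** 2


# Same direction: the distance is zero regardless of vector length.
print(squared_spherical_distance([1.0, 0.0], [2.0, 0.0]))  # 0.0

# Orthogonal directions: the angle is pi/2, so the distance is (pi/2)**2, about 2.467.
print(squared_spherical_distance([1.0, 0.0], [0.0, 3.0]))
```

Only the direction of each embedding matters, which is why both vectors are normalised before the dot product is taken.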

Images generated by StyleGAN-T (Source: https://arxiv.org/pdf/2301.09515.pdf)

Part of the training objective is to minimise the squared spherical distance, guiding the generator toward images that match the text description. In addition, a discriminator network with several heads evaluates both generated and real images.

Each discriminator head evaluates a different aspect of image quality and realism. The discriminator's feedback and the computed distance loss are used to update the generator and the discriminator through gradient-based optimisation, with gradients obtained by backpropagation.

The training procedure iterates, adjusting the generator and discriminator until the model learns to create high-quality images that match the input text prompts. Once trained, the StyleGAN-T model can be used to generate images from new text prompts. The text prompt and a random latent vector are fed into the generator, which produces images that fit the description.
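One way to picture how these signals combine for the generator is a weighted sum. The sketch below is an assumption-laden illustration: summing a logistic loss over the discriminator heads, the `clip_weight` parameter, and all numeric values are hypothetical and are not taken from the StyleGAN-T code.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def generator_objective(head_logits, clip_distance, clip_weight):
    """Sum the adversarial loss over discriminator heads, then add the text-alignment term."""
    adversarial = sum(softplus(-logit) for logit in head_logits)
    return adversarial + clip_weight * clip_distance


# Hypothetical values: three discriminator heads scoring one generated image.
head_logits = [0.8, -0.3, 1.2]
well_aligned = generator_objective(head_logits, clip_distance=0.2, clip_weight=0.5)
poorly_aligned = generator_objective(head_logits, clip_distance=1.5, clip_weight=0.5)

print(well_aligned < poorly_aligned)
```

With identical discriminator scores, the image that sits closer to the text embedding gets the lower loss, so the check prints `True`.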

StyleGAN-T Use Cases

Data Augmentation

In computer vision, there are times when an image dataset used to train a model is too small or lacks variety.

Classical image processing is often used to produce new versions of images by making minor adjustments to them. However, this strategy often leads to overfitting, particularly when the dataset is small, because the model is essentially seeing the same images over and over. StyleGAN-T could be used to expand the dataset with more diverse synthetic images.
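To make the limitation concrete, here is a minimal sketch of two classical augmentations, a horizontal flip and a brightness shift, applied to a tiny grayscale image stored as nested lists. The function names and pixel values are illustrative only.

```python
def horizontal_flip(image):
    """Mirror each row of a 2D grayscale image."""
    return [list(reversed(row)) for row in image]


def adjust_brightness(image, delta):
    """Shift pixel values by delta, clamped to the 0-255 range."""
    return [[max(0, min(255, pixel + delta)) for pixel in row] for row in image]


image = [[10, 200, 30],
         [40, 50, 250]]

print(horizontal_flip(image))        # [[30, 200, 10], [250, 50, 40]]
print(adjust_brightness(image, 20))  # [[30, 220, 50], [60, 70, 255]]
```

Both outputs contain the same scene as the input, only rearranged or re-tinted, which is why this kind of augmentation adds little genuinely new information to a small dataset.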

Gaming

Many video games are set in fictional worlds with fictional characters and objects. StyleGAN-T could be used to create these fictional locations, creatures, and items.

Design, Art

While reading this article and looking at the example images, you may have noticed the wide range of creative imagery that StyleGAN-T can produce. The inventiveness and aesthetics of StyleGAN-T images could be put to use in design projects, art projects, and more.

How To Start Using StyleGAN-T

The training code for the StyleGAN-T paper is available on GitHub, along with instructions for preparing your data in the required format.

The repository includes a training script that can be used to train the model on various datasets. The training command's parameters can be changed to suit your dataset, image resolution, batch size, and training duration. You can resume training from a previously saved checkpoint by providing the checkpoint path.

The StyleGAN-T repository is released under the NVIDIA Source Code License.

Conclusion

StyleGAN-T is an advanced text-to-image generation model that integrates natural language processing with computer vision. StyleGAN-T improves on earlier StyleGAN versions and competes with diffusion models in terms of efficiency and performance.

To produce high-quality images from text prompts, the StyleGAN-T model employs a GAN architecture and a pre-trained CLIP text encoder. StyleGAN-T has potential applications in data augmentation, e-commerce, gaming, fashion, design, and art.
