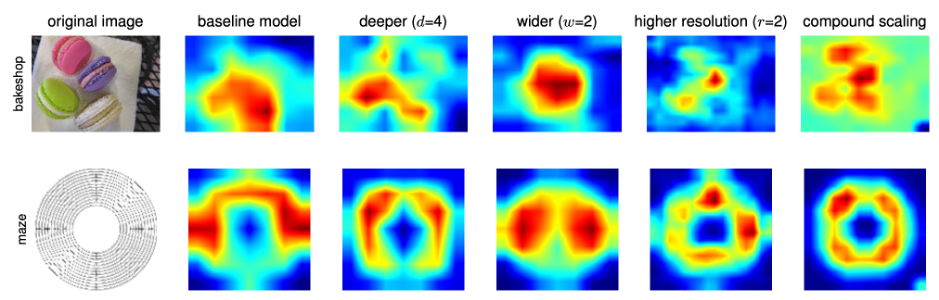

EfficientNet class activation map (Source: https://arxiv.org/pdf/1905.11946.pdf)

EfficientNet, introduced in 2019 by a team of Google AI researchers, quickly became a go-to architecture for many demanding computer vision tasks, including image classification, object detection, and image segmentation. Its effectiveness comes from striking a balance between two crucial deep learning factors: computational efficiency and model performance.

Conventional approaches to building and scaling deep learning models often trade accuracy against resource use. EfficientNet addresses this issue with an innovative method known as "compound scaling."

By scaling the model's dimensions (width, depth, and resolution) together in a methodical way, EfficientNet reaches remarkable efficiency without sacrificing accuracy. The result is a family of models of different sizes that can be matched to different computational budgets and hardware capabilities.

In this article, we look at the design of EfficientNet, the technical details of compound scaling, and how compound scaling has changed the field of deep learning.

What is EfficientNet?

EfficientNet is a family of convolutional neural networks built on the idea of "compound scaling." This idea tackles the long-standing trade-off between model size, accuracy, and computational efficiency. Compound scaling scales three critical dimensions of a neural network together: width, depth, and resolution.

- Width: Width scaling changes the number of channels in each layer of the network. A wider model can capture more fine-grained patterns and features, which tends to improve accuracy. Reducing the width, on the other hand, yields a lighter model that suits low-resource applications.

- Depth: The total number of layers in the network is referred to as depth scaling. Deeper models can capture more complex data representations, but they also need more computing resources. Shallower models, on the other hand, are more computationally efficient but may forfeit accuracy.

- Resolution: Resolution scaling changes the size of the input image. Higher-resolution images carry more detail, which can improve performance, but they require more memory and processing power. Lower-resolution images use fewer resources but may lose fine-grained information.
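To see why the three dimensions are not interchangeable, it helps to look at cost. The EfficientNet paper notes that the FLOPS of a regular convolutional network grow roughly in proportion to d · w² · r², where d, w, and r are the depth, width, and resolution multipliers. The minimal Python sketch below encodes that rule of thumb; the function name is our own, and the formula is an approximation rather than an exact FLOPS count:

```python
def relative_flops(d: float, w: float, r: float) -> float:
    """Approximate FLOPS relative to the baseline (d = w = r = 1).

    Depth adds layers linearly; width multiplies both the input and output
    channels of each convolution; resolution multiplies both spatial axes.
    """
    return d * w ** 2 * r ** 2


for name, (d, w, r) in {
    "2x depth": (2.0, 1.0, 1.0),
    "2x width": (1.0, 2.0, 1.0),
    "2x resolution": (1.0, 1.0, 2.0),
}.items():
    print(f"{name}: {relative_flops(d, w, r):.1f}x FLOPS")
```

Doubling depth roughly doubles the cost, while doubling either width or resolution roughly quadruples it, because each of those affects two factors of every convolution.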

The chart below shows the effect of scaling across multiple dimensions at once.

Scaling Network Width for Different Baseline Networks. Each dot in a line denotes a model with a different width coefficient (w). The first baseline network (d=1.0, r=1.0) has 18 convolutional layers with a resolution of 224x224, while the last baseline (d=2.0, r=1.3) has 36 layers with a resolution of 299x299. (Source: https://arxiv.org/pdf/1905.11946.pdf)

One of EfficientNet's strengths is that it balances all three dimensions with a principled method instead of tuning them by hand. Starting from a baseline model, the researchers run a small grid search to find how much each dimension should grow relative to the others. A single compound coefficient, "phi" (φ), then scales all three dimensions together. Phi is a user-defined parameter that sets the overall complexity and resource needs of the model.

How Compound Scaling Works

The procedure starts with a baseline model. In EfficientNet, this baseline is EfficientNet-B0, a small network found with neural architecture search that optimises for both accuracy and computational cost (FLOPS).

Next, the user chooses a compound coefficient, phi, which determines how much to scale the network. It is a single scalar that adjusts the model's width, depth, and resolution together, so changing phi changes the overall complexity and resource needs of the model.

The dimensions are then scaled. The core principle of compound scaling is to grow the baseline model's width, depth, and resolution in a balanced, coordinated way, with phi determining the scaling factor for each dimension:

- Width scaling: The number of channels in every layer is multiplied by w = β^φ (the constant beta raised to the power phi).

- Depth scaling: The number of layers is multiplied by d = α^φ (the constant alpha raised to the power phi).

- Resolution scaling: The input image size is multiplied by r = γ^φ (the constant gamma raised to the power phi).

The constants alpha, beta, and gamma must then be established. They are found with a small grid search on the baseline model with phi fixed at 1, subject to the constraint α · β² · γ² ≈ 2 (with α, β, γ ≥ 1). Because the FLOPS of a convolutional network grow roughly in proportion to depth · width² · resolution², this constraint means that each unit increase in phi roughly doubles the computational cost. For EfficientNet-B0, the search gave α = 1.2, β = 1.1, and γ = 1.15. Once found, the constants stay fixed and only phi is varied to produce larger models.
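The arithmetic can be sketched in a few lines of Python. This assumes the B0 constants reported in the EfficientNet paper (α = 1.2, β = 1.1, γ = 1.15); the function name is hypothetical. It shows that each per-dimension factor is a constant raised to the power φ, and that total FLOPS grow by (α · β² · γ²)^φ, roughly 2^φ:

```python
# Constants reported in the EfficientNet paper for the B0 baseline (grid search at phi = 1).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution


def compound_scaling(phi: float) -> tuple[float, float, float]:
    """Return the (depth, width, resolution) multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


# The search constrains alpha * beta^2 * gamma^2 to about 2, so FLOPS grow by about 2^phi.
flops_base = ALPHA * BETA ** 2 * GAMMA ** 2
print(f"alpha * beta^2 * gamma^2 = {flops_base:.3f}")  # 1.920

for phi in range(4):
    d, w, r = compound_scaling(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}, "
          f"FLOPS x{flops_base ** phi:.2f}")
```

Multiplying the baseline's 224-pixel input by the resolution factor gives the scaled input size for a given φ.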

Once the scaling factors for width, depth, and resolution are known, they are applied to the baseline model, with channel and layer counts rounded to whole numbers. The result is the EfficientNet variant for that phi value.
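A sketch of that step, under stated assumptions: the stage table below is EfficientNet-B0's list of MBConv stages (output channels and number of layers) from Table 1 of the paper, and the rounding rules mirror those used in common open-source implementations such as Keras (channels rounded to a multiple of 8, layer counts rounded up). Helper names are our own, and details of the real network (stem, head, strides, kernel sizes) are left out:

```python
import math

# MBConv stages of EfficientNet-B0 as (output channels, number of layers), per Table 1 of the paper.
B0_STAGES = [(16, 1), (24, 2), (40, 2), (80, 3), (112, 3), (192, 4), (320, 1)]
B0_RESOLUTION = 224


def round_filters(filters: int, width: float, divisor: int = 8) -> int:
    """Scale a channel count and round it to the nearest multiple of `divisor`."""
    scaled = filters * width
    new_filters = max(divisor, int(scaled + divisor / 2) // divisor * divisor)
    if new_filters < 0.9 * scaled:  # never round down by more than 10%
        new_filters += divisor
    return int(new_filters)


def round_repeats(repeats: int, depth: float) -> int:
    """Scale a layer count, rounding up so that no stage loses layers."""
    return int(math.ceil(repeats * depth))


def scale_b0(depth: float, width: float, resolution: float):
    """Apply depth/width/resolution multipliers to the (hypothetical) B0 stage table."""
    stages = [(round_filters(c, width), round_repeats(n, depth)) for c, n in B0_STAGES]
    return stages, int(round(B0_RESOLUTION * resolution))


# Factors for phi = 1: depth 1.2, width 1.1, resolution 1.15.
stages, res = scale_b0(1.2, 1.1, 1.15)
print(stages)  # [(16, 2), (24, 3), (48, 3), (88, 4), (120, 4), (208, 5), (352, 2)]
print(res)     # 258
```

These numbers follow from the formula alone; released checkpoints may use slightly different coefficients.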

Researchers and practitioners can select from a variety of EfficientNet models, each corresponding to a particular phi value, depending on the unique use case and available computing resources. Smaller phi values result in lighter, more resource-efficient models, whereas bigger phi values result in more powerful, but computationally costly, models.

By using compound scaling, EfficientNet covers a wide range of model sizes, each striking a good balance between accuracy and resource consumption. This ability to scale efficiently has made it highly influential in deep learning: at the time of its release it achieved state-of-the-art results on a variety of computer vision tasks while adapting to a variety of hardware constraints.

EfficientNet Architecture

EfficientNet is built from Mobile Inverted Bottleneck (MBConv) layers, which combine depth-wise separable convolutions with inverted residual blocks. The design also uses Squeeze-and-Excitation (SE) blocks to improve the model's performance.

EfficientNet Architecture (Source: https://www.researchgate.net/figure/Architecture-of-EfficientNet-B0-with-MBConv-as-Basic-building-blocks_fig4_344410350)

Architecture of EfficientUNet with EfficientNet-B0 framework for semantic segmentation. Blocks of EfficientNet-B0 as encoder (Source: https://www.researchgate.net/figure/Architecture-of-EfficientNet-B0-with-MBConv-as-Basic-building-blocks_fig4_344410350)

The MBConv layer is the core building block of EfficientNet. It is inspired by the inverted residual block of MobileNetV2, with changes such as the addition of squeeze-and-excitation and the use of the swish activation.

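An MBConv layer begins with a point-wise (1x1) convolution that expands the number of channels, typically by a factor of 6. A depth-wise convolution (3x3 or 5x5) then filters each expanded channel spatially, and a final 1x1 convolution projects the channels back down to a narrow output. When the input and output shapes match, a residual connection adds the block's input to its output. Because the expensive spatial filtering is done depth-wise, this inverted bottleneck keeps computation low while retaining a high level of representational capability.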
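The expand, depth-wise, project sequence can be sketched in NumPy. This is a simplified illustration under clear assumptions, not EfficientNet's actual implementation: it uses random weights, stride 1, and "same" padding, and it leaves out batch normalisation and the squeeze-and-excitation step so that the core structure stays visible. Helper names are our own:

```python
import numpy as np


def swish(x):
    """Swish / SiLU activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def pointwise_conv(x, w):
    """1x1 convolution: x has shape (H, W, C_in), w has shape (C_in, C_out)."""
    return x @ w


def depthwise_conv(x, k):
    """Depth-wise convolution, stride 1, 'same' padding: x is (H, W, C), k is (K, K, C)."""
    ksize = k.shape[0]
    pad = ksize // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    h, w, _ = x.shape
    out = np.zeros_like(x)
    for i in range(ksize):
        for j in range(ksize):
            out += xp[i:i + h, j:j + w, :] * k[i, j, :]  # one filter per channel
    return out


def mbconv(x, w_expand, k_dw, w_project):
    """Expand (1x1) -> depth-wise (KxK) -> project (1x1), plus an identity skip."""
    h = swish(pointwise_conv(x, w_expand))  # widen: C -> C * expand_ratio
    h = swish(depthwise_conv(h, k_dw))      # spatial filtering per channel
    h = pointwise_conv(h, w_project)        # linear bottleneck: no activation
    if h.shape == x.shape:                  # residual only when shapes match
        h = h + x
    return h


rng = np.random.default_rng(0)
channels, expand_ratio, ksize = 16, 6, 3
x = rng.standard_normal((8, 8, channels))
w_expand = rng.standard_normal((channels, channels * expand_ratio)) * 0.1
k_dw = rng.standard_normal((ksize, ksize, channels * expand_ratio)) * 0.1
w_project = rng.standard_normal((channels * expand_ratio, channels)) * 0.1
y = mbconv(x, w_expand, k_dw, w_project)
print(y.shape)  # (8, 8, 16)
```

The final projection has no activation (a "linear bottleneck", as in MobileNetV2), and the skip connection is only added when the block keeps the same shape.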

In addition to MBConv layers, EfficientNet uses the SE block, which teaches the model to emphasise informative channels while suppressing less useful ones. The SE block first applies global average pooling, which squeezes each channel's spatial map into a single number, and then passes the resulting vector through two fully connected layers.

These layers let the model learn relationships between channels and produce per-channel attention weights (squashed to the range 0 to 1 by a sigmoid), which are multiplied with the original feature map to highlight the most significant information.
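A minimal NumPy sketch of the squeeze, excite, and rescale steps, assuming a feature map laid out as (height, width, channels). The reduction to a quarter of the channels and the swish activation between the two fully connected layers are assumptions that match common EfficientNet implementations (the original SE paper used ReLU there). Weights are random, purely for illustration:

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def squeeze_excite(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation on a feature map x of shape (H, W, C)."""
    s = x.mean(axis=(0, 1))         # squeeze: global average pool -> (C,)
    z = s @ w1 + b1                 # fully connected layer 1: C -> C_reduced
    z = z / (1.0 + np.exp(-z))      # swish activation (assumed, as in common EfficientNet code)
    gates = sigmoid(z @ w2 + b2)    # fully connected layer 2: C_reduced -> C, values in (0, 1)
    return x * gates                # excite: rescale each channel by its gate


rng = np.random.default_rng(0)
c, c_reduced = 16, 4
x = rng.standard_normal((8, 8, c))
w1, b1 = rng.standard_normal((c, c_reduced)), np.zeros(c_reduced)
w2, b2 = rng.standard_normal((c_reduced, c)), np.zeros(c)
y = squeeze_excite(x, w1, b1, w2, b2)
print(y.shape)  # (8, 8, 16)
```

Since the gates lie between 0 and 1, the block reweights channels relative to one another; it never changes the spatial layout of the feature map.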

EfficientNet comes in several variants with different scaling coefficients, from EfficientNet-B0 up to EfficientNet-B7. Each variant represents a different trade-off between model size and accuracy, so users can choose the one that best fits their needs.
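For reference, the sketch below lists the (width, depth, input resolution) coefficients that common implementations, such as the Keras `EfficientNetB0` to `EfficientNetB7` application models, use for each variant, and picks the largest variant whose native input size fits a given budget. Using input resolution as the only selection criterion is a simplifying assumption for illustration, and the helper name is our own; in practice, memory, latency, and accuracy targets all matter:

```python
# (width coefficient, depth coefficient, native input resolution) per variant,
# as used in common implementations such as Keras's EfficientNetB0 ... EfficientNetB7.
VARIANTS = {
    "B0": (1.0, 1.0, 224),
    "B1": (1.0, 1.1, 240),
    "B2": (1.1, 1.2, 260),
    "B3": (1.2, 1.4, 300),
    "B4": (1.4, 1.8, 380),
    "B5": (1.6, 2.2, 456),
    "B6": (1.8, 2.6, 528),
    "B7": (2.0, 3.1, 600),
}


def largest_variant_for(max_resolution: int) -> str:
    """Pick the biggest variant whose native input size fits a resolution budget."""
    fitting = [name for name, (_, _, res) in VARIANTS.items() if res <= max_resolution]
    if not fitting:
        raise ValueError("no EfficientNet variant fits this input size")
    return fitting[-1]  # dict order runs from smallest to largest


print(largest_variant_for(320))  # B3
```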

EfficientNet Performance

The EfficientNet curve is marked in red in the figure below. The horizontal axis shows model size (number of parameters), and the vertical axis shows ImageNet top-1 accuracy. A quick glance at the chart shows EfficientNet's advantage. At the top of the range, EfficientNet-B7 surpasses the previous state-of-the-art model, GPipe, by a mere 0.1% in accuracy.

Model size vs ImageNet accuracy (Source: https://arxiv.org/pdf/1905.11946.pdf)

What is noteworthy is how efficiently this accuracy is achieved. GPipe uses 556 million parameters, while EfficientNet-B7 uses just 66 million. In practice, a 0.1% gain in accuracy may go unnoticed, but a model roughly 8.4 times smaller (and, according to the paper, 6.1 times faster at inference) is far more usable and much better suited to real-world industrial applications.
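The size gap is easy to quantify. The back-of-the-envelope calculation below uses the parameter counts above and assumes 32-bit floating-point weights (4 bytes each), ignoring activations and optimiser state:

```python
gpipe_params = 556e6
efficientnet_b7_params = 66e6
bytes_per_param = 4  # assumption: float32 weights only

ratio = gpipe_params / efficientnet_b7_params
print(f"{ratio:.1f}x fewer parameters")                                       # 8.4x
print(f"GPipe weights: {gpipe_params * bytes_per_param / 1e9:.2f} GB")        # 2.22 GB
print(f"EfficientNet-B7 weights: {efficientnet_b7_params * bytes_per_param / 1e9:.2f} GB")  # 0.26 GB
```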

Conclusion

EfficientNet's compound scaling technique influenced how the field understands the trade-off between efficiency and accuracy in deep learning. By scaling width, depth, and resolution together in a principled way, it produces a family of models that can be matched to different hardware constraints.

Its lightweight yet robust architecture, built from Mobile Inverted Bottleneck layers with Squeeze-and-Excitation blocks, delivers strong performance across a wide range of computer vision workloads.

Learn more about the SKY ENGINE AI offering

To learn more about synthetic data tools, methods, and technology, check out the following resources:

- Telecommunication equipment AI-driven detection using drones

- A talk on Ray tracing for Deep Learning to generate synthetic data at GTC 2020 by Jakub Pietrzak, CTO SKY ENGINE AI (Free registration required)

- Example synthetic data videos generated in SKY ENGINE AI platform to accelerate AI models training

- Presentation on using synthetic data for building 5G network performance optimization platform

- Working example with code for synthetic data generation and AI models training for team-based sport analytics in SKY ENGINE AI platform