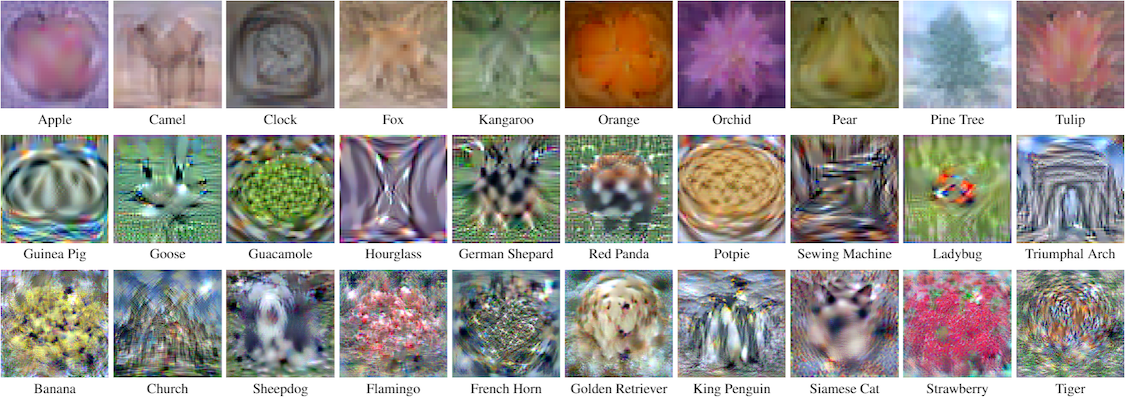

Dataset distillation by matching trajectories (Source: https://georgecazenavette.github.io/mtt-distillation/)

Large-scale datasets have become the foundation for training accurate and robust machine learning models. However, as datasets grow larger and more complex, training on the full dataset becomes computationally expensive and time-consuming. This is where dataset distillation comes in: it reduces the computational cost of training while retaining the information that matters.

Dataset distillation is the process of condensing a large dataset into a much smaller one that captures its most essential and representative aspects. Unlike simply choosing a subset of the original samples (coreset selection), most dataset distillation methods synthesise new samples. The aim is a small, streamlined dataset that preserves the statistical features and generalisation capabilities of its bigger counterpart.

A visual representation of the dataset distillation process. Dataset distillation aims to generate a small informative dataset such that the models trained on these samples have similar test performance to those trained on the original dataset. (Source: https://arxiv.org/pdf/2301.07014.pdf)

What is Dataset Distillation?

Dataset distillation is a machine learning approach that produces a small set of data samples, either selected from or, more commonly, synthesised from a larger dataset, while keeping the original dataset's key information and statistical features. Its purpose is to generate a smaller, more manageable version of the dataset that retains the properties needed for training, so that models trained on it perform similarly to models trained on the full data.

Distilling a dataset can reduce the computing requirements (i.e. cost) of training machine learning models while largely preserving their performance. This is especially useful in resource-constrained settings, in projects with large-scale datasets, or when many training runs and experiments must be completed quickly.

Several steps are involved in the dataset distillation process:

- Data analysis: The first stage is analysing the original dataset. This involves understanding the data distribution, identifying relevant features, and deciding which properties must be kept in the distilled dataset.

- Sample selection or synthesis: Once the data has been analysed, the next step is to construct a small set of samples that reflects the original dataset. Classical approaches select real samples, for example through instance selection, prototype selection, or representative sample selection; most dataset distillation methods instead synthesise new samples and optimise them directly. Either way, the goal is to capture the diversity, representativeness, and relevance of the original data.

- Selection criteria: Specific criteria guide which samples end up in the distilled dataset, and they differ depending on the aims of the distillation. Diversity criteria aim to ensure that the samples cover a wide range of the data space. Representativeness criteria aim to ensure that the samples follow the original dataset's distribution. Relevance criteria focus on samples that are pertinent to the task at hand.

- Distilling knowledge: Dataset distillation techniques use different optimisation objectives to produce or refine the distilled samples; the main families are described below.

- Evaluation and validation: Once the distilled dataset has been created, it is critical to assess how well models trained on it perform. This means checking whether the distilled dataset preserves the original dataset's essential statistical properties, generalisation capabilities, and performance. Models trained on the distilled dataset should be compared with models trained on the entire dataset using appropriate evaluation metrics.
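The evaluation step can be illustrated with a toy example. The sketch below is an illustrative assumption, not a method from the sources cited in this article: it builds a two-class 2-D dataset, "distils" it into one synthetic prototype per class (the class mean, a newly synthesised point rather than a selected one), and compares a 1-nearest-neighbour classifier fitted on the full training data with one fitted on the two prototypes. The helpers `make_blobs`, `class_mean_prototypes`, and `one_nn_accuracy` are hypothetical names written for this sketch.

```python
import random


def make_blobs(n_per_class, seed):
    """Toy data: two well-separated Gaussian clusters labelled 0 and 1."""
    rng = random.Random(seed)
    data = []
    for label, (cx, cy) in enumerate([(0.0, 0.0), (5.0, 5.0)]):
        for _ in range(n_per_class):
            data.append(((rng.gauss(cx, 1.0), rng.gauss(cy, 1.0)), label))
    return data


def class_mean_prototypes(data):
    """'Distil' the data into one synthetic point per class: the class mean."""
    sums = {}
    for (x, y), label in data:
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return [((sx / n, sy / n), label) for label, (sx, sy, n) in sums.items()]


def one_nn_accuracy(train, test):
    """Accuracy on `test` of a 1-nearest-neighbour classifier fitted on `train`."""
    correct = 0
    for (x, y), label in test:
        nearest = min(train, key=lambda s: (s[0][0] - x) ** 2 + (s[0][1] - y) ** 2)
        correct += nearest[1] == label
    return correct / len(test)


train = make_blobs(500, seed=0)
test = make_blobs(200, seed=1)
distilled = class_mean_prototypes(train)

full_acc = one_nn_accuracy(train, test)
distilled_acc = one_nn_accuracy(distilled, test)
print(f"full: {len(train)} samples, accuracy {full_acc:.3f}")
print(f"distilled: {len(distilled)} samples, accuracy {distilled_acc:.3f}")
```

On such well-separated clusters both accuracies should be close to 1.0 even though the distilled set has only two samples; on real data, the gap between the two numbers is exactly what the evaluation step is meant to measure.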

The success of dataset distillation depends on the task at hand, the quality of the original dataset, the selection criteria, and the distillation technique used. To ensure the distilled dataset is useful and trustworthy, it is critical to analyse the original data thoroughly, choose appropriate techniques, and evaluate the models trained on the distilled dataset.

In the next part, we look at three contemporary families of dataset distillation methods: Performance Matching, Distribution Matching, and Parameter Matching, and describe how each of them produces a distilled dataset.

Methodologies for Dataset Distillation

Dataset distillation approaches can be grouped into three major types according to their optimisation objective: performance matching, parameter matching, and distribution matching.

Each category includes a number of methods; for each category, we describe two of them. The figure below depicts the categories of dataset distillation.

Categories of dataset distillation.

Performance Matching

Performance matching optimises the distilled dataset directly for the quantity we ultimately care about: the performance of models trained on it. The goal is that a neural network trained on the small synthetic dataset reaches a loss (or accuracy) on the original dataset comparable to that of a network trained on the original dataset itself.

This is naturally formulated as a bi-level optimisation problem. In the inner loop, a network is trained on the synthetic dataset. In the outer loop, the trained network is evaluated on the original (real) data, and the resulting loss is used to update the synthetic samples, and in some methods their labels as well.

Because the outer loss depends on the synthetic data only through the entire inner training process, computing its gradient requires differentiating through that training, either by unrolling the optimisation steps or by using a model whose training has a closed-form solution.

Once distilled, the small synthetic dataset can be used to train new models far more cheaply than the original dataset. This is especially useful when many models must be trained, for example during hyperparameter tuning or architecture search, or when computing resources are limited.
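As a worked formulation, performance matching is commonly written as the bi-level problem below, using the notation of the survey cited in the figure captions (real dataset $\mathcal{T}$, synthetic dataset $\mathcal{S}$). The step size $\eta$, the number of inner steps $K$, and the initialisation distribution $P_{\theta_0}$ are generic symbols introduced here for illustration.

```latex
\mathcal{S}^{*} = \arg\min_{\mathcal{S}} \;
  \mathbb{E}_{\theta^{(0)} \sim P_{\theta_0}}
  \Big[ \mathcal{L}_{\mathcal{T}}\big(\theta^{(K)}\big) \Big],
\qquad
\theta^{(k)} = \theta^{(k-1)} - \eta \, \nabla_{\theta} \mathcal{L}_{\mathcal{S}}\big(\theta^{(k-1)}\big),
\quad k = 1, \dots, K
```

The inner update trains a freshly initialised network on $\mathcal{S}$ for $K$ steps; the outer objective scores the result on $\mathcal{T}$, so its gradient with respect to $\mathcal{S}$ has to flow back through all $K$ inner steps.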

Meta-Learning

The meta-learning approach, which falls under performance matching, follows the original formulation of dataset distillation: it treats the synthetic samples as meta-parameters to be learned.

In meta-learning, a model learns how to learn, for instance by finding an initialisation from which new tasks can be learned in a few steps. Here the roles are shifted: what is learned is the data, chosen so that a few training steps on it produce a network that performs well on the real task.

Concretely, each outer iteration samples a network initialisation, trains the network for a small number of gradient steps on the synthetic dataset (the inner loop), and then measures its loss on a batch of real data (the meta-test loss).

The gradient of this meta-test loss with respect to the synthetic samples is obtained by back-propagating through the unrolled inner training steps, and the synthetic samples (and, in some variants, the inner learning rate) are updated accordingly. Because every inner step must be kept in memory for back-propagation, this approach is expensive and is usually limited to short inner loops.

When the process is finished, the output is the synthetic dataset itself, not a particular model: new networks can be trained on it and then applied to the real task.

Meta-learning-based performance matching. The bi-level learning framework aims to optimize the meta test loss on the real dataset T, for the model meta-trained on the synthetic dataset S. (Source: https://arxiv.org/pdf/2301.07014.pdf)
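The bi-level loop can be illustrated on a toy problem. This is a minimal sketch under simplifying assumptions, not the original method: the "network" is a one-parameter linear model y = w * x, the real dataset is five points on the line y = 3x, the synthetic dataset has two learnable points, and the outer gradient is estimated with central finite differences instead of back-propagating through the unrolled inner steps. All function names are hypothetical.

```python
def inner_train(synthetic, steps=20, lr=0.1, w0=0.0):
    """Inner loop: train y = w * x on the synthetic set by gradient descent on MSE."""
    w = w0
    n = len(synthetic)
    for _ in range(steps):
        grad = sum(2.0 * (w * x - y) * x for x, y in synthetic) / n
        w -= lr * grad
    return w


def meta_test_loss(synthetic, real):
    """Outer objective: MSE on the real data of the model trained on the synthetic data."""
    w = inner_train(synthetic)
    return sum((w * x - y) ** 2 for x, y in real) / len(real)


def distil(real, synthetic, outer_steps=200, outer_lr=0.05, eps=1e-4):
    """Update every synthetic coordinate with finite-difference gradient descent."""
    params = [v for point in synthetic for v in point]  # flatten [(x, y), ...]

    def unflatten(p):
        return [(p[i], p[i + 1]) for i in range(0, len(p), 2)]

    for _ in range(outer_steps):
        grads = []
        for i in range(len(params)):
            up, down = params[:], params[:]
            up[i] += eps
            down[i] -= eps
            diff = meta_test_loss(unflatten(up), real) - meta_test_loss(unflatten(down), real)
            grads.append(diff / (2 * eps))
        params = [p - outer_lr * g for p, g in zip(params, grads)]
    return unflatten(params)


real = [(x, 3.0 * x) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
synthetic0 = [(0.5, 0.0), (-0.5, 0.0)]  # arbitrary initial synthetic points

initial = meta_test_loss(synthetic0, real)
synthetic = distil(real, synthetic0)
final = meta_test_loss(synthetic, real)
print(f"meta-test loss before: {initial:.4f}, after: {final:.4f}")
```

With all-zero synthetic labels the inner model never moves from w = 0, so the initial meta-test loss is 4.5; the outer updates reshape the two synthetic points until a short training run on them lands close to w = 3. Real implementations replace the finite differences with automatic differentiation through the inner loop.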

Kernel Ridge Regression

Kernel ridge regression (KRR) based methods also fall under performance matching. They replace the neural network in the inner loop with kernel ridge regression, whose training has a closed-form solution.

Kernel ridge regression combines ridge regression (least squares with an L2 penalty on the weights) with the kernel trick, which lets it model non-linear relationships through a kernel function. It is widely used for regression problems involving a continuous target. Given a set of synthetic inputs and labels, the KRR predictor fitted to them can be written down directly, without iterative training.

This predictor is then evaluated on the real data, and its error on the real labels is back-propagated to the synthetic samples. Because there is no inner optimisation loop to unroll, the bi-level problem reduces to a single-level one, which is much cheaper to optimise than the meta-learning formulation.

When the kernel is the neural tangent kernel (NTK) of a network, KRR corresponds to training an infinitely wide version of that network, so synthetic data learned this way can often also be used to train ordinary, finite-width neural networks.
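The closed-form step can be sketched in a few lines. This toy example illustrates the general KRR-based idea and is not a reimplementation of any published method. Its assumptions: a 1-D Gaussian RBF kernel instead of a neural tangent kernel, a small Gaussian-elimination solver instead of a linear-algebra library, fixed synthetic inputs with only the synthetic labels learned, and gradients estimated by finite differences. The constants `GAMMA` and `LAM` and all function names are hypothetical. The predictor fitted on the synthetic set is k(x, X_S)(K_SS + λI)^-1 y_S, and its squared error on the real data is the distillation loss.

```python
import math

GAMMA = 2.0   # RBF kernel parameter (assumed value)
LAM = 1e-3    # ridge regularisation strength (assumed value)


def rbf(a, b):
    return math.exp(-GAMMA * (a - b) ** 2)


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [r] for row, r in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def krr_loss(xs, ys, xt, yt):
    """Fit KRR on the synthetic set (xs, ys) in closed form and score it on the real set."""
    k_ss = [[rbf(a, b) + (LAM if i == j else 0.0) for j, b in enumerate(xs)]
            for i, a in enumerate(xs)]
    alpha = solve(k_ss, ys)  # (K_SS + lambda * I)^-1 y_S
    preds = [sum(rbf(x, s) * a for s, a in zip(xs, alpha)) for x in xt]
    return sum((p - y) ** 2 for p, y in zip(preds, yt)) / len(yt)


xt = [i / 4 for i in range(-4, 5)]  # real inputs -1.0 ... 1.0
yt = [x * x for x in xt]            # real targets y = x^2
xs = [-1.0, 0.0, 1.0]               # synthetic inputs (kept fixed here)
ys = [0.0, 0.0, 0.0]                # synthetic labels to be learned

initial = krr_loss(xs, ys, xt, yt)
eps, lr = 1e-5, 0.5
for _ in range(300):
    grads = []
    for i in range(len(ys)):
        up, down = ys[:], ys[:]
        up[i] += eps
        down[i] -= eps
        grads.append((krr_loss(xs, up, xt, yt) - krr_loss(xs, down, xt, yt)) / (2 * eps))
    ys = [y - lr * g for y, g in zip(ys, grads)]
final = krr_loss(xs, ys, xt, yt)
print(f"KRR loss on real data before: {initial:.4f}, after: {final:.4f}")
```

Note that no training loop appears inside `krr_loss`: the "trained model" is a single linear solve, which is what makes this family cheaper than unrolling gradient descent.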

Distribution Matching

In dataset distillation, distribution matching aims to align the data distribution of the small synthetic (target) dataset with that of the larger original (source) dataset. Instead of looking at training outcomes, it makes the synthetic samples reproduce the statistical properties of the real data, typically measured in the feature space of neural networks.

This matters because a machine learning model's performance depends on the data it is trained on. If the synthetic dataset does not represent the distribution of the original dataset, models trained on it may fail to generalise to previously unseen data. By matching the data distribution, models trained on the synthetic dataset can generalise better and perform closer to models trained on the original data for the same task.

A practical advantage of distribution matching is that it avoids the nested, bi-level optimisation required by performance matching. The synthetic samples can be updated directly from feature statistics, often computed with randomly initialised networks, which makes the approach considerably cheaper and easier to scale to larger synthetic datasets.

Distribution matching. It matches statistics of features in some networks for synthetic and real datasets. (Source: https://arxiv.org/pdf/2301.07014.pdf)

Single Layer

Single-layer distribution matching compares the distributions of real and synthetic data at a single layer of a neural network, typically the final feature (embedding) layer. Making the two datasets' representations at that layer statistically similar helps the synthetic dataset capture the underlying statistical properties of the original one.

Several strategies can be used. A typical one is to define a loss term that measures how different the real and synthetic representations are at the chosen layer, and to update the synthetic samples to minimise it.

This loss can be expressed in a variety of ways. A popular choice is a discrepancy measure such as the maximum mean discrepancy (MMD), which compares the mean embeddings of the two sets in a (possibly kernel-induced) feature space; divergences such as the Kullback-Leibler (KL) divergence can also be used. Minimising the discrepancy aligns the two data distributions.
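A minimal sketch of an MMD-based single-layer loss, under stated assumptions: the "layer" is a fixed, randomly initialised linear map followed by ReLU, the kernel is a Gaussian RBF whose bandwidth is set by the median heuristic, and the estimator is the simple biased (V-statistic) form of squared MMD. The helper names are hypothetical, and the snippet only computes the loss; a distillation method would additionally update the synthetic samples to reduce it.

```python
import math
import random
import statistics


def random_relu_layer(in_dim, out_dim, seed):
    """A fixed, randomly initialised linear layer followed by ReLU (never trained)."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]
    return lambda x: [max(0.0, sum(w * v for w, v in zip(row, x))) for row in weights]


def sq_dist(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))


def mmd2(feats_a, feats_b, gamma):
    """Biased (V-statistic) estimate of squared MMD with an RBF kernel."""
    def mean_k(p, q):
        return sum(math.exp(-gamma * sq_dist(u, v)) for u in p for v in q) / (len(p) * len(q))
    return mean_k(feats_a, feats_a) + mean_k(feats_b, feats_b) - 2 * mean_k(feats_a, feats_b)


rng = random.Random(0)
real = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(100)]
close = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(10)]    # same distribution
shifted = [[rng.gauss(3, 1), rng.gauss(3, 1)] for _ in range(10)]  # shifted distribution

layer = random_relu_layer(2, 16, seed=1)
real_f = [layer(x) for x in real]
close_f = [layer(x) for x in close]
shifted_f = [layer(x) for x in shifted]

# Median heuristic: pick the RBF bandwidth from typical distances between real features.
gamma = 1.0 / statistics.median(
    sq_dist(u, v) for i, u in enumerate(real_f) for v in real_f[i + 1:]
)
d_close = mmd2(real_f, close_f, gamma)
d_shifted = mmd2(real_f, shifted_f, gamma)
print(f"MMD^2 real vs close: {d_close:.4f}, real vs shifted: {d_shifted:.4f}")
```

The set drawn from the same distribution gets a much smaller discrepancy than the shifted one, which is the signal a synthetic set would be optimised against.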

Another option is adversarial learning, in which a separate discriminator network is trained to distinguish real from synthetic representations at that layer. The synthetic data is then optimised to "fool" the discriminator, producing representations that are hard to tell apart, which aligns the two distributions.

Multi Layer

Multi-layer distribution matching aligns the distributions of real and synthetic data at several layers of a neural network rather than just one, so that both low-level and high-level features are matched.
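Extending the idea to several layers, the sketch below sums a per-layer discrepancy over both layers of a small, randomly initialised two-layer ReLU network. It assumes the simplest discrepancy, the squared distance between mean feature vectors (an MMD with a linear kernel), and equal layer weights by default; both choices, and all names, are illustrative assumptions.

```python
import random


def make_layer(in_dim, out_dim, rng):
    """A fixed random linear layer followed by ReLU."""
    weights = [[rng.gauss(0.0, 1.0 / in_dim ** 0.5) for _ in range(in_dim)]
               for _ in range(out_dim)]
    return lambda x: [max(0.0, sum(w * v for w, v in zip(row, x))) for row in weights]


def layer_features(x, layers):
    """Return the activations of every layer for a single input x."""
    feats = []
    for layer in layers:
        x = layer(x)
        feats.append(x)
    return feats


def mean_vector(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def multi_layer_loss(real, synthetic, layers, layer_weights=None):
    """Weighted sum over layers of ||mean real features - mean synthetic features||^2."""
    real_feats = [layer_features(x, layers) for x in real]
    syn_feats = [layer_features(x, layers) for x in synthetic]
    layer_weights = layer_weights or [1.0] * len(layers)
    total = 0.0
    for depth, lw in enumerate(layer_weights):
        mu_r = mean_vector([f[depth] for f in real_feats])
        mu_s = mean_vector([f[depth] for f in syn_feats])
        total += lw * sum((a - b) ** 2 for a, b in zip(mu_r, mu_s))
    return total


rng = random.Random(0)
layers = [make_layer(2, 16, rng), make_layer(16, 8, rng)]
real = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
close = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(20)]
shifted = [[rng.gauss(3, 1), rng.gauss(3, 1)] for _ in range(20)]

print("loss(real, close):  ", round(multi_layer_loss(real, close, layers), 4))
print("loss(real, shifted):", round(multi_layer_loss(real, shifted, layers), 4))
```

Weighting the layers differently (for example emphasising deeper, more semantic features) is a design choice the `layer_weights` argument leaves open.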

A variety of strategies can be used, many of them borrowed from domain adaptation, where methods that reduce the distribution discrepancy between two datasets across several network layers at the same time are well established.

Domain adversarial training is a prominent example. A domain classifier (discriminator) is added that tries to distinguish real from synthetic representations at several layers, and the representations are optimised both to reduce the discrepancy and to deceive the discriminator.

Another method uses gradient reversal layers, which act as the identity in the forward pass but flip the sign of the gradients flowing back from the domain discriminator during training. This pushes the representations towards ones for which the discriminator cannot tell the two datasets apart, aligning their distributions.

Both single-layer and multi-layer distribution matching have benefits, and either may be more appropriate depending on the dataset, task, and network architecture. The choice depends on factors such as dataset complexity, network depth, and how closely the distributions need to be aligned.

Parameter Matching

Parameter matching trains the same network architecture on the original and on the synthetic dataset and encourages the two training processes to produce consistent parameters. The idea is that if a network trained on the synthetic data ends up with (nearly) the same parameters as one trained on the real data, it will also behave similarly on unseen test data.

Parameter matching can be carried out over different horizons. The comparison can be made after a single training step, by matching the gradients that real and synthetic data induce at the same parameter values, or after many steps, by matching segments of the training trajectory.

In both cases, the distance between the parameters (or gradients) obtained from real and synthetic data serves as the distillation loss, and it is back-propagated to the synthetic samples, whose values are treated as learnable variables.

This differs from knowledge distillation, which compresses a large teacher model into a smaller student model. In parameter matching, both training runs usually use networks of the same architecture; what becomes smaller is the training set, not the model.

Because the resulting synthetic dataset is small, networks can later be trained on it at a fraction of the cost of training on the full dataset, which is valuable when computing resources are limited.

Single-Step

Single-step parameter matching, commonly known as gradient matching, compares the effect of a single training step on real data with that of a single step on synthetic data.

Rather than comparing whole training runs, it compares the gradients of the training loss with respect to the network parameters, computed on a batch of real data and on a batch of synthetic data at the same parameter values. The synthetic samples are updated until the two gradients agree, so that a training step on the synthetic data moves the network much as a step on the real data would.

Single-step parameter matching (Source: https://arxiv.org/pdf/2301.07014.pdf)

A typical procedure alternates two updates. Starting from a randomly initialised network, the distance between the real and synthetic gradients (for example a layer-wise cosine distance) is computed and back-propagated to the synthetic samples.

The network is then trained on the synthetic data with an ordinary optimiser such as gradient descent, so that the gradients are matched at many points along its training path. The whole loop is repeated from several random initialisations so that the synthetic data does not overfit to one particular network.

Because each comparison involves only one step, the synthetic data needs to reproduce only short-range training behaviour, which keeps every update cheap.
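Single-step parameter matching is best known as gradient matching, and a toy version can be written under simplifying assumptions: the network is a linear regression model with two weights and squared-error loss; gradients are compared at a fixed handful of random parameter vectors instead of alternating with network training; the distance is a squared Euclidean norm (published methods often use a layer-wise cosine distance); and the synthetic points are updated with finite-difference gradients rather than automatic differentiation. All names are hypothetical.

```python
import random


def loss_grad(theta, data):
    """Gradient of the mean squared error of y = theta . x with respect to theta."""
    g = [0.0] * len(theta)
    for x, y in data:
        residual = sum(t * v for t, v in zip(theta, x)) - y
        for j, v in enumerate(x):
            g[j] += 2.0 * residual * v / len(data)
    return g


def unflatten(p):
    """Turn [x0, x1, y, x0, x1, y, ...] into [((x0, x1), y), ...]."""
    return [((p[i], p[i + 1]), p[i + 2]) for i in range(0, len(p), 3)]


def matching_loss(params, real_grads, thetas):
    """Sum over sampled parameters of ||grad on real data - grad on synthetic data||^2."""
    synthetic = unflatten(params)
    total = 0.0
    for g_r, theta in zip(real_grads, thetas):
        g_s = loss_grad(theta, synthetic)
        total += sum((a - b) ** 2 for a, b in zip(g_r, g_s))
    return total


rng = random.Random(0)
real = []
for _ in range(100):
    x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    real.append((x, 2.0 * x[0] - 1.0 * x[1]))

thetas = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(3)]  # sampled network parameters
real_grads = [loss_grad(t, real) for t in thetas]                # real gradients never change
params = [0.5, 0.1, 0.0, -0.2, 0.6, 0.0]                          # two synthetic points (x0, x1, y)

initial = matching_loss(params, real_grads, thetas)
eps, lr = 1e-5, 0.002
for _ in range(2000):
    grads = []
    for i in range(len(params)):
        up, down = params[:], params[:]
        up[i] += eps
        down[i] -= eps
        diff = matching_loss(up, real_grads, thetas) - matching_loss(down, real_grads, thetas)
        grads.append(diff / (2 * eps))
    params = [p - lr * g for p, g in zip(params, grads)]
final = matching_loss(params, real_grads, thetas)
print(f"gradient-matching loss before: {initial:.4f}, after: {final:.4f}")
```

Two synthetic points end up inducing gradients close to those of the 100 real points at the sampled parameters, which is the property the method relies on.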

Single-step parameter matching is therefore attractive when the computational budget or memory is limited, since no long unrolled training run needs to be stored.

However, its success depends on factors such as task difficulty, dataset complexity, and the size of the synthetic dataset, and small mismatches at each step can accumulate over a long training run. Multi-step parameter matching addresses this by comparing parameters over longer stretches of training.

Multi-Step

Multi-step parameter matching, also known as trajectory matching, compares network parameters after many training steps rather than after one.

First, networks are trained on the real dataset and their parameters are saved at regular intervals; these saved sequences are called expert trajectories. During distillation, a student network is initialised from an expert checkpoint and trained for several steps on the synthetic data. The synthetic data is then updated to minimise the distance between the student's final parameters and a later checkpoint of the expert, usually normalised by how far the expert itself moved over that interval.

Multi-step parameter matching optimises the consistency of the model parameters learned from real and synthetic data; it is also known as matching training trajectories. (Source: https://arxiv.org/pdf/2301.07014.pdf)

Since the expert trajectories are computed once in advance, each distillation step only has to run the short student segment. The gradient with respect to the synthetic data is obtained by back-propagating through all of the student's steps, so memory use grows with the number of steps matched; in some variants the student's learning rate is learned as well.
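A toy sketch of trajectory matching, under assumptions chosen for brevity: a two-weight linear regression model stands in for the network; a single expert segment is recorded by M steps of full-batch gradient descent on the real data; the student starts from the same checkpoint and takes N steps on two synthetic points; the loss is the squared distance between the student's end point and the expert's, divided by the squared distance the expert travelled. For stability of the toy, the synthetic inputs are kept fixed, only the synthetic labels are learned, the student learning rate is fixed, and gradients are estimated by finite differences. All names and constants are hypothetical.

```python
import random


def gd_steps(theta, data, steps, lr):
    """Full-batch gradient descent on the MSE of y = theta . x; returns the final theta."""
    theta = list(theta)
    for _ in range(steps):
        g = [0.0] * len(theta)
        for x, y in data:
            residual = sum(t * v for t, v in zip(theta, x)) - y
            for j, v in enumerate(x):
                g[j] += 2.0 * residual * v / len(data)
        theta = [t - lr * gj for t, gj in zip(theta, g)]
    return theta


def sq_dist(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))


rng = random.Random(0)
real = []
for _ in range(100):
    x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    real.append((x, 2.0 * x[0] - 1.0 * x[1]))

# Expert segment: M steps of training on the real data from the checkpoint theta_start.
theta_start = [0.0, 0.0]
M, N = 50, 10
theta_target = gd_steps(theta_start, real, M, lr=0.1)

syn_x = [(0.5, 0.1), (-0.2, 0.6)]  # synthetic inputs, kept fixed in this toy


def trajectory_loss(syn_y):
    """Student: N steps on the synthetic data from theta_start, compared with the expert."""
    theta_student = gd_steps(theta_start, list(zip(syn_x, syn_y)), N, lr=0.5)
    return sq_dist(theta_student, theta_target) / sq_dist(theta_start, theta_target)


syn_y = [0.0, 0.0]
initial = trajectory_loss(syn_y)
eps, outer_lr = 1e-5, 0.5
for _ in range(300):
    grads = []
    for i in range(len(syn_y)):
        up, down = syn_y[:], syn_y[:]
        up[i] += eps
        down[i] -= eps
        grads.append((trajectory_loss(up) - trajectory_loss(down)) / (2 * eps))
    syn_y = [y - outer_lr * g for y, g in zip(syn_y, grads)]
final = trajectory_loss(syn_y)
print(f"normalised trajectory loss before: {initial:.4f}, after: {final:.6f}")
```

With zero labels the student never leaves its starting point, so the normalised loss starts at exactly 1.0; after optimisation, 10 steps on two synthetic points reproduce where 50 steps on 100 real points lead.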

Compared with single-step matching, multi-step matching captures longer-range training dynamics: the synthetic data is asked to reproduce where training on the real data leads after many steps, not just the direction of the next step.

By aligning longer segments of training, trajectory matching lets the synthetic data reflect more of the original dataset's learning behaviour, which is often reported to improve the performance of models trained on it, at a higher memory cost. The figure at the top of this article illustrates dataset distillation by matching trajectories.

Dataset Distillation Applications

Computer Vision/Vision AI

Object detection, semantic segmentation, and image classification can all benefit from dataset distillation. Let us look at its potential advantages in each of these areas:

- Object detection: Dataset distillation can condense a large object detection dataset into a smaller one. If the distilled dataset retains the variety of object instances and the contextual information of the original, detectors trained on it can approach the accuracy and robustness of detectors trained on the full dataset, including across a broad range of object types and variants, at a fraction of the training cost.

- Semantic segmentation: Dataset distillation can condense a large dataset of images with labelled segmentation masks. The distilled dataset can capture the original dataset's spatial context, object boundaries, and semantic information, so a segmentation model trained on it can still learn to recognise and separate distinct objects and regions in images.

- Image classification: Dataset distillation can condense a large dataset covering many image classes. The distilled dataset can capture the discriminative features, visual patterns, and decision boundaries of the original data, allowing a classifier trained on it to approach the accuracy and generalisation of one trained on the full data while training much more efficiently.

In all three cases, dataset distillation transfers the valuable information in a large dataset into a smaller one, so models trained on it can learn the relevant representations, patterns, and contextual information more efficiently. By producing a more concentrated and representative training dataset, dataset distillation may also help with issues such as scarce labelled data, class imbalance, or noisy samples.

Neural Architecture Search

Neural Architecture Search (NAS) automates the process of finding the best neural network architecture for a particular task. Dataset distillation can make this search more efficient.

Evaluating and comparing many candidate architectures in NAS often requires a large amount of computing resources, because each candidate has to be trained. By condensing the knowledge in a huge dataset into a smaller, representative dataset, dataset distillation can help ease this computational burden: training each candidate on the reduced dataset speeds up the evaluation and iteration of architectures throughout the search.

Because the distilled dataset captures the key patterns, statistical features, and variety of the original dataset, the NAS algorithm can explore and assess many network configurations within a smaller computing budget. For this to work, candidate architectures should rank in roughly the same order when trained on the distilled dataset as when trained on the full dataset, so that the best architecture found on the distilled proxy is also a good choice for the real task.

Knowledge Distillation

Dataset distillation can also be used as a component of knowledge distillation to make it more efficient. Instead of the full original dataset, the student model can be trained on a distilled dataset that captures the key patterns, correlations, and properties of the original data. This condensed dataset may be produced using methods such as density matching, distribution matching, or other techniques that try to capture the information the teacher model relies on.

The generic teacher-student framework for knowledge distillation. (Source: https://arxiv.org/pdf/2006.05525.pdf)
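For reference, the teacher-student loss in the figure is commonly implemented as the Kullback-Leibler divergence between temperature-softened teacher and student outputs, scaled by the squared temperature, as in the classic knowledge distillation formulation of Hinton et al. The sketch below assumes that form; the temperature value and function names are illustrative, and in practice this term is usually combined with an ordinary cross-entropy loss on the true labels.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by temperature**2."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    return temperature ** 2 * kl


teacher = [4.0, 1.0, -2.0]
print(kd_loss([4.0, 1.0, -2.0], teacher))  # identical logits -> 0.0
print(kd_loss([0.0, 0.0, 0.0], teacher))   # uninformed student -> positive loss
```

The higher temperature flattens the teacher's distribution so that the student also learns from the relative probabilities of the wrong classes; the temperature-squared factor keeps the gradient scale comparable across temperatures.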

Training the student on the distilled dataset lets it learn from the teacher using a smaller, more targeted set of samples. This may result in faster training convergence and, in some cases, less overfitting and better generalisation.

Using a distilled dataset also reduces the computational and memory demands of training on large-scale datasets. The student can learn the most important information in the original dataset without the complete dataset having to be processed and stored for every training run.

Conclusion

We looked at the notion of dataset distillation and how it works. Condensing the information in a large dataset into a much smaller one allows models to benefit from the insights and patterns captured in the large dataset while avoiding much of its computational and memory cost.

We covered three major dataset distillation methodologies: Performance Matching, Distribution Matching, and Parameter Matching.

Meta-Learning and Kernel Ridge Regression (KRR) are two performance-matching strategies: they optimise the distilled dataset so that models trained on it perform like models trained on the original dataset. Distribution matching approaches, Single Layer and Multi Layer, match the data distributions of the distilled and original datasets in the feature space of neural networks. Parameter matching approaches, Single-Step and Multi-Step, match the parameters (or gradients) of networks trained on the distilled and original datasets.

We also looked at applications of dataset distillation. In computer vision, it can make training for tasks such as object detection, semantic segmentation, and image classification far more efficient while retaining much of the performance and generalisation of models trained on the full data; it can also speed up neural architecture search and make knowledge distillation cheaper.

By condensing the knowledge contained in large datasets, dataset distillation provides a powerful way to train models efficiently while retaining much of their performance and generalisation. It holds considerable promise across many domains and tasks, allowing models to achieve competitive results at a lower computing cost.

Learn more about the SKY ENGINE AI offering

To get more information on synthetic data, tools, methods, and technology, check out the following resources:

- Telecommunication equipment AI-driven detection using drones

- A talk on Ray tracing for Deep Learning to generate synthetic data at GTC 2020 by Jakub Pietrzak, CTO SKY ENGINE AI (Free registration required)

- Example synthetic data videos generated in SKY ENGINE AI platform to accelerate AI models training

- Presentation on using synthetic data for building 5G network performance optimization platform

- Working example with code for synthetic data generation and AI models training for team-based sport analytics in SKY ENGINE AI platform